Data Annotation Tech: The Ultimate Guide for Beginners

Data annotation technology can feel overwhelming when you’re just starting out. With so many tools and techniques, how do you even know where to begin? The key is not to get bogged down in the details. Start with the basics. Get familiar with the different types of annotations and how they’re used. Build a simple project to get hands-on experience. You’ll be annotating datasets like a pro before you know it. This guide breaks data annotation down into easy-to-understand pieces. We’ll walk through real examples to get you annotating in no time. Don’t let the tech intimidate you. With the right introduction, data annotation is fun and rewarding. You’ve got this!

What Is Data Annotation Technology?

Data annotation technology refers to the process of labeling data to make it usable for machine learning and AI systems. In other words, it involves adding informative tags, labels or other metadata to data like images, text, audio, and video.

Why is it important?

Without annotated data, most AI systems could not be trained. Supervised machine learning algorithms require large amounts of high-quality annotated data to learn how to perform tasks like object detection, speech recognition, machine translation, and more.

The annotation process

The specific steps involved in annotating data depend on the type of data and the end goal. But in general, it follows these main phases:

Selecting the data – The first step is gathering the raw data that needs to be annotated. This could be a dataset of product photos, customer service calls, social media posts, etc.

Determining annotation guidelines – Next, you need to decide what types of labels or tags you want to apply to the data. The guidelines will specify things like label categories, attribute values, formatting, etc.

Annotating the data – This involves manually reviewing each data sample and adding labels, tags or other metadata as defined in the guidelines. Annotators, who are often freelancers, use annotation tools to complete this process efficiently.

Reviewing and validating – A final review validates the quality and consistency of the annotations before the dataset is ready to use. This may involve correcting any errors or inconsistencies found.
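To make the guidelines step concrete, here is a minimal sketch, assuming a hypothetical image-classification project whose guidelines list the allowed label categories and the attributes each label may carry. `GUIDELINES`, `Annotation`, and `validate` are illustrative names, not part of any particular annotation tool.

```python
from dataclasses import dataclass

# Hypothetical guidelines: allowed labels and the attributes each may carry.
GUIDELINES = {
    "car": {"color", "occluded"},
    "pedestrian": {"occluded"},
    "bicycle": set(),
}


@dataclass
class Annotation:
    item_id: str      # which data sample was labeled
    annotator: str    # who labeled it
    label: str        # should be one of the guideline categories
    attributes: dict  # extra metadata, e.g. {"color": "red"}


def validate(ann: Annotation, guidelines: dict) -> list:
    """Return a list of guideline violations (empty if the annotation is valid)."""
    if ann.label not in guidelines:
        return [f"{ann.item_id}: unknown label {ann.label!r}"]
    problems = []
    for name in sorted(set(ann.attributes) - guidelines[ann.label]):
        problems.append(f"{ann.item_id}: attribute {name!r} not allowed for {ann.label!r}")
    return problems


ok = Annotation("img_001", "alice", "car", {"color": "red"})
bad = Annotation("img_002", "bob", "truck", {})
print(validate(ok, GUIDELINES))   # []
print(validate(bad, GUIDELINES))  # ["img_002: unknown label 'truck'"]
```

Writing the guidelines down as data like this lets the review step check every annotation automatically before a human looks at it.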

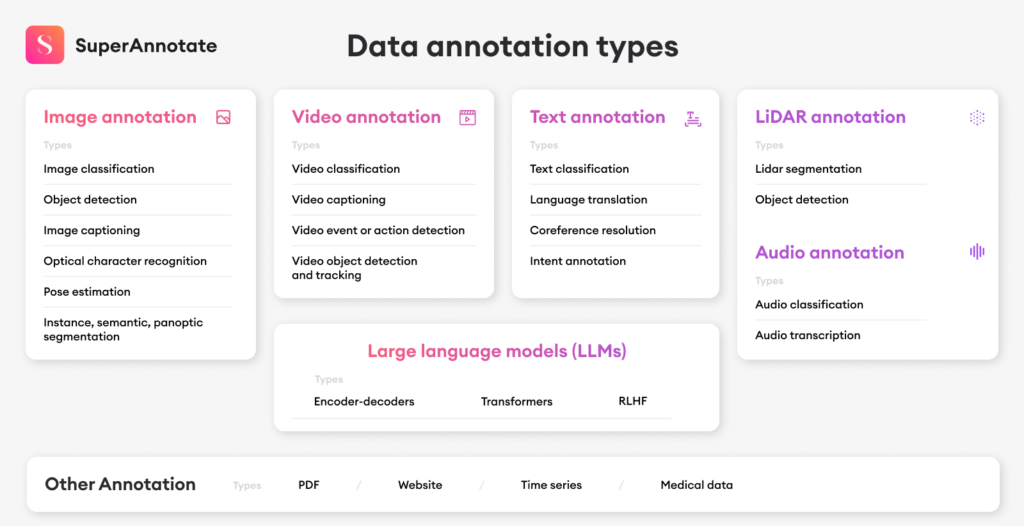

Common Types of Data Annotation

Image annotation: Adding labels, bounding boxes, and descriptions to images. Used for object detection and image classification.

Text annotation: Labeling keywords, entities, sentiments, and other attributes in text data. Used for tasks like named entity recognition and sentiment analysis.

Video annotation: Annotating objects, actions, events, and more in video data. Used for applications such as automated video editing and virtual assistants.

Audio annotation: Transcribing and labeling speech data. Used for speech recognition, speaker identification, and other audio-based AI systems.

Why Is Data Annotation Important for AI?

Data annotation provides the raw material that fuels AI systems and machine learning models. As the saying goes, “garbage in, garbage out.” If an AI is trained on low-quality, messy data, its performance and predictions will be flawed. Data annotation helps ensure that AI systems are learning from high-quality, structured data.

It Helps Train AI Models

The annotated data is used to train machine learning algorithms and build AI models. The models learn directly from the examples in the dataset, so the higher the quantity and quality of examples, the more accurate the model can become. Data annotation provides the fuel for model training and helps determine how well the model will ultimately perform.

It Teaches AI Systems

An AI system has to be taught how to interpret the world in the way that humans do. By annotating data, we are, in a sense, teaching the AI system how we make sense of and label the information in the world. The AI learns directly from the annotations, which act as guides for how it should interpret and categorize new data.

It Improves AI Performance

The performance of an AI system depends directly on the data used to train it. Higher-quality data leads to higher-quality predictions and decisions. Data annotation helps ensure the data is coherent, consistent, and aligned with human interpretations. This results in AI that behaves as intended and provides a good user experience.

It Reduces Bias

Data annotation also plays an important role in reducing bias in AI systems. It provides an opportunity for data annotators to consider potential biases in the data and account for them. When diverse groups are involved in the annotation process, they can identify and address a wider range of biases. Careful data annotation is key to building AI that is fair, unbiased, and beneficial for all.

In summary, data annotation provides the foundation for AI. It is essential for training accurate machine learning models, teaching AI systems how to interpret the world, improving AI performance, and reducing harmful biases. High-quality annotated data leads to high-quality AI.

Data Annotation Techniques Explained

Manual Annotation

Manual data annotation involves human annotators labeling data by hand. This technique requires annotators to review data samples one by one and assign labels based on their subject matter expertise. Manual annotation typically produces high-quality labels but can be time-consuming and expensive, especially for large datasets.

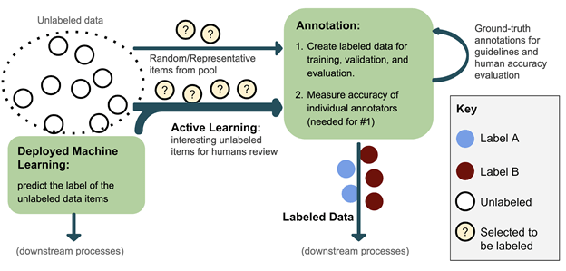

Semi-Automated Annotation

Semi-automated annotation combines manual annotation with some level of automation to speed up the process. For example, annotators can use annotation tools that suggest possible labels to select from, rather than typing labels from scratch for each sample. Annotators can also review and correct labels suggested by an automated model. Semi-automated techniques aim to improve annotation efficiency while maintaining label accuracy.
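One common way to organize this kind of review is to sort a model's suggested labels by confidence, as in the sketch below. It assumes a hypothetical model that outputs `(item_id, label, confidence)` tuples, and the 0.9 threshold is arbitrary. Every suggestion still goes to a human: confident ones to a quick confirm-or-correct queue, uncertain ones to a more careful review.

```python
def route_suggestions(suggestions, threshold=0.9):
    """Split model-suggested labels into two human review queues.

    suggestions: iterable of (item_id, suggested_label, confidence) tuples,
    where confidence is assumed to be a probability between 0 and 1.
    """
    quick_confirm, careful_review = [], []
    for item_id, label, confidence in suggestions:
        # Confident suggestions are pre-filled so the annotator just confirms
        # or corrects them; uncertain ones get a closer look.
        if confidence >= threshold:
            quick_confirm.append((item_id, label))
        else:
            careful_review.append((item_id, label))
    return quick_confirm, careful_review


quick, careful = route_suggestions([
    ("img_1", "cat", 0.97),
    ("img_2", "dog", 0.55),
    ("img_3", "cat", 0.91),
])
print(quick)    # [('img_1', 'cat'), ('img_3', 'cat')]
print(careful)  # [('img_2', 'dog')]
```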

Automated Annotation

Automated annotation uses machine learning models to assign labels to data samples automatically. The models must first be trained on a manually annotated dataset. Then, the trained models can predict labels for new unannotated data. Automated annotation is fast and low-cost but risks lower label quality compared to manual annotation. Automated models may fail to capture the nuances that human experts can identify. Automated annotation works best when the data and labels are relatively straightforward for a model to learn.
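As a toy illustration of "train on manual labels, then predict labels for new data", the sketch below uses a tiny naive Bayes text classifier written from scratch. Naive Bayes is a swapped-in example, not a method the text prescribes; a real project would more likely use a library model, and the training sentences here are made up.

```python
import math
from collections import Counter, defaultdict


def train(labeled):
    """Learn word statistics from manually labeled (text, label) pairs."""
    word_counts = defaultdict(Counter)  # label -> word frequencies
    label_counts = Counter()            # label -> number of examples
    vocab = set()
    for text, label in labeled:
        words = text.lower().split()
        word_counts[label].update(words)
        label_counts[label] += 1
        vocab.update(words)
    return word_counts, label_counts, vocab


def predict(model, text):
    """Assign the most probable label to unannotated text
    (multinomial naive Bayes with add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Manually annotated examples (made up for illustration).
model = train([
    ("great product love it", "positive"),
    ("love this", "positive"),
    ("terrible waste of money", "negative"),
    ("awful terrible", "negative"),
])
print(predict(model, "love it"))       # positive
print(predict(model, "such a waste"))  # negative
```

With only four training sentences the model knows very little, which mirrors the caveat above: automated labels are only as good as the manual data behind them.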

Crowdsourcing

Crowdsourcing annotation distributes the annotation task to a large group of non-expert annotators, often online. Requesters publish annotation jobs that crowd workers can sign up to complete. Crowdsourcing is fast, low-cost, and scalable, but quality control can be challenging. Techniques like majority voting, where multiple annotators label the same data and final labels are determined by majority agreement, aim to improve quality. Crowdsourcing may be a good option when non-expert opinions or perceptions are valuable.
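Majority voting fits in a few lines. This sketch assumes each item's labels arrive as a plain list of strings, and it returns `None` when no label wins more than half the votes (for example, a tie), on the assumption that such items are sent to an expert rather than guessed.

```python
from collections import Counter


def majority_vote(labels, min_agreement=0.5):
    """Return the label chosen by more than `min_agreement` of annotators,
    or None when there is no clear majority."""
    if not labels:
        return None
    label, votes = Counter(labels).most_common(1)[0]
    if votes / len(labels) > min_agreement:
        return label
    return None  # no clear winner: route to expert review


print(majority_vote(["cat", "cat", "dog"]))  # cat
print(majority_vote(["cat", "dog"]))         # None
```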

In summary, there are several useful data annotation techniques, each with strengths and trade-offs to consider based on your needs. Combining multiple techniques, like semi-automated annotation with crowdsourcing, can help maximize the benefits of each. The key is choosing an approach that will produce high-quality, actionable data labels within the constraints of your time and budget.

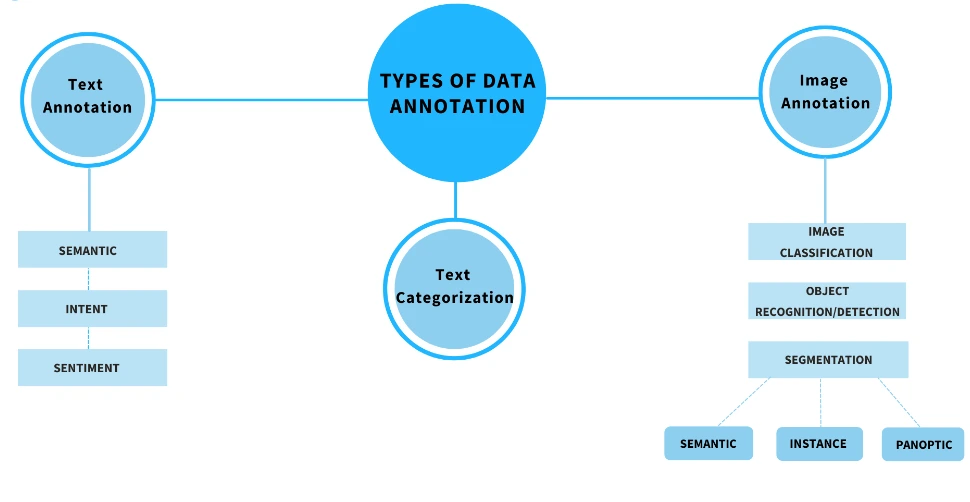

Image Annotation vs Text Annotation

The Basics

When it comes to data annotation, two of the most common types are image annotation and text annotation. Image annotation involves labeling visual data like photos, videos, MRI scans or X-rays. Text annotation applies to written data such as emails, legal documents, news articles or social media posts.

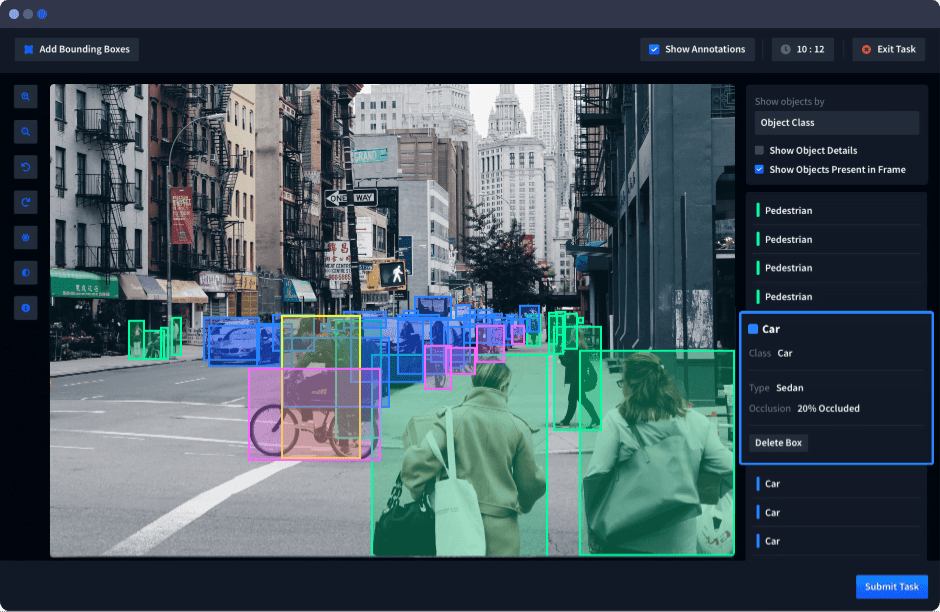

Image Annotation

Image annotation requires specialized tools that allow you to draw boxes, polygons or segmentation masks on images. You then label these regions to identify objects, actions or attributes. This is useful for training computer vision models to detect objects and classify images. For example, you might annotate images to identify vehicles, animals, facial expressions or medical conditions.
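A bounding box is usually stored as a label plus pixel coordinates. The sketch below assumes corner format `(x_min, y_min, x_max, y_max)` with the origin at the top-left; tools and datasets differ (some store a corner plus width and height), so treat `BoundingBox` as an illustrative structure rather than any specific tool's format.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    label: str
    x_min: float  # pixel coordinates, origin at the top-left corner
    y_min: float
    x_max: float
    y_max: float

    def is_valid(self, image_width: int, image_height: int) -> bool:
        """A box must have positive size and lie inside the image."""
        return (0 <= self.x_min < self.x_max <= image_width
                and 0 <= self.y_min < self.y_max <= image_height)

    def normalized(self, image_width: int, image_height: int) -> tuple:
        """Corner coordinates scaled to [0, 1], independent of image resolution."""
        return (self.x_min / image_width, self.y_min / image_height,
                self.x_max / image_width, self.y_max / image_height)


box = BoundingBox("car", 100, 50, 300, 150)
print(box.is_valid(640, 480))   # True
print(box.normalized(400, 200))  # (0.25, 0.25, 0.75, 0.75)
```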

Text Annotation

Text annotation uses a different set of tools focused on highlighting and tagging passages of text. You can label words, phrases, sentences or entire documents. This helps train natural language processing models for tasks like sentiment analysis, named entity recognition or topic classification. For instance, you might annotate a dataset of tweets to determine if each one expresses a positive, negative or neutral sentiment. Or you might label news articles by the entities and events they mention.
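Text annotations are commonly stored as character-offset spans over the original string. This sketch assumes half-open `[start, end)` offsets and, for simplicity, rejects overlapping spans; some annotation schemes allow nested entities, so that rule is an assumption. `Span` and `check_spans` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Span:
    start: int  # character offset where the span begins (inclusive)
    end: int    # character offset where the span ends (exclusive)
    label: str  # e.g. an entity type such as "ORG" or "LOC"


def check_spans(text, spans):
    """Return (label, covered_text) pairs in reading order, raising if a
    span is out of range or overlaps the previous one."""
    result = []
    prev_end = 0
    for span in sorted(spans, key=lambda s: s.start):
        if not (0 <= span.start < span.end <= len(text)):
            raise ValueError(f"span {span} is outside the text")
        if span.start < prev_end:
            raise ValueError(f"span {span} overlaps the previous span")
        result.append((span.label, text[span.start:span.end]))
        prev_end = span.end
    return result


text = "Apple opened a store in Paris."
print(check_spans(text, [Span(24, 29, "LOC"), Span(0, 5, "ORG")]))
# [('ORG', 'Apple'), ('LOC', 'Paris')]
```

Storing offsets instead of copied strings keeps each label tied to an exact position, which matters when the same word appears more than once in a document.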

Use Cases

The type of annotation you choose depends on your end goal. Image annotation is ideal for computer vision applications where visual data is key. Text annotation suits natural language processing tasks centered around written language. Some projects may require a combination of both image and text annotation to generate a robust, multi-modal dataset.

Pros and Cons

Image annotation typically requires more time and effort but may yield higher-quality data. Text annotation can be done more quickly at a larger scale but is limited to linguistic information. There are also open-source and commercial tools available for both image and text annotation to suit a range of needs and budgets.

In the end, the most important thing is choosing an annotation approach that will generate the type of data you need to build and train your AI models. With high-quality annotated datasets, the possibilities for machine learning are endless.

Data Labeling Tools and Software

Once you’ve gathered the data, you need to label it. Luckily, there are many useful tools and platforms built specifically for data annotation.

Labeling Platforms

Labeling platforms like Appen and Amazon SageMaker Ground Truth allow you to upload your data, create labeling tasks, and distribute the work to human annotators. These platforms can handle much of the labeling process for you, from sourcing annotators to quality control. Using a labeling platform is a great option if you have a large dataset or limited time.

Open Source Tools

If you’re on a budget or want more customization, consider an open source tool like Label Studio, CVAT, or VoTT (Visual Object Tagging Tool). These free tools provide interfaces for labeling data: Label Studio supports images, video, text, and audio, while CVAT and VoTT focus on images and video. You’ll need to handle the annotation tasks yourself, but you’ll have full control over the labeling process.

Specialized Software

For specialized data types like lidar point clouds, 3D meshes or medical scans, look for dedicated labeling software. Some options include CloudCompare, 3D Semantic Labeling Tool, and ITK-SNAP. These tools provide interfaces and features tailored to your specific data format.

The data labeling process can be time-consuming, so choosing the right tools and platforms is key. Think about your data type, annotation needs, and available resources to determine the best option for your project. With the proper tools in place and a team of skilled annotators, you’ll be producing high-quality training data in no time.


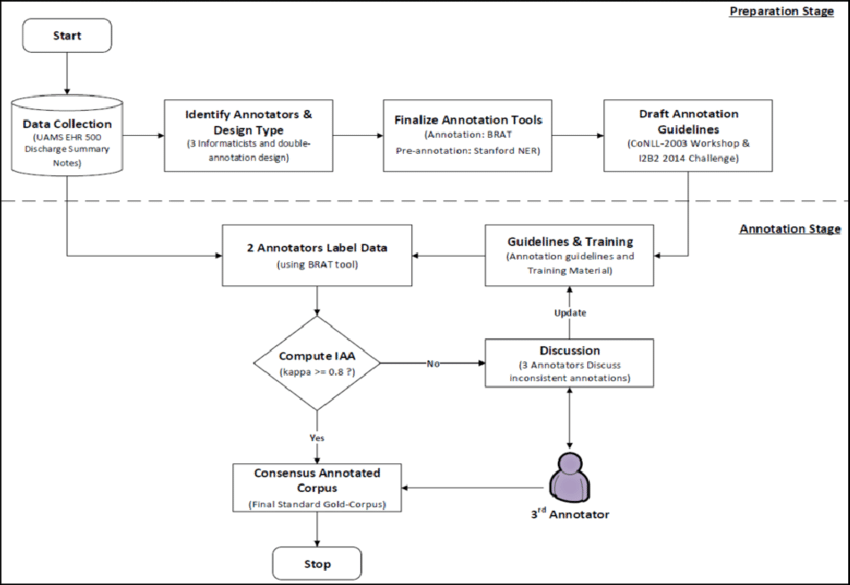

Data Annotation Process and Workflow

The data annotation process typically follows a basic workflow. Once you have data that needs to be annotated, the first step is to determine the annotation task. This could be image classification, object detection, text classification, or something else.

Next, you’ll need to select an annotation tool or platform. Options like Appen and Amazon SageMaker Ground Truth provide interfaces for annotating data. These tools allow you to upload your data, define the annotation task, and get started.

Then comes the actual annotation work. This is done by human annotators who review and label the data. The annotators are provided guidelines to follow to ensure consistency. For example, image annotators may be labeling objects, drawing bounding boxes around items, or tagging photos. Text annotators may be classifying sentences, extracting entities, or identifying topics.

Annotators work through the data, reviewing and labeling each piece of information. Their work is monitored and evaluated to ensure high quality. In many projects, multiple annotators label the same data, and adjudicators then resolve any disagreements.
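Finding the items that need adjudication is essentially a grouping step. The sketch below assumes annotations arrive as hypothetical `(item_id, annotator, label)` tuples and returns only the items where annotators chose different labels, ready to hand to an adjudicator.

```python
from collections import defaultdict


def find_disagreements(annotations):
    """Group (item_id, annotator, label) tuples by item and return
    {item_id: {annotator: label}} for items whose labels differ.
    If an annotator labels the same item twice, the later label wins."""
    by_item = defaultdict(dict)
    for item_id, annotator, label in annotations:
        by_item[item_id][annotator] = label
    return {item_id: labels for item_id, labels in by_item.items()
            if len(set(labels.values())) > 1}


queue = find_disagreements([
    ("img_1", "alice", "cat"), ("img_1", "bob", "cat"),
    ("img_2", "alice", "cat"), ("img_2", "bob", "dog"),
])
print(queue)  # {'img_2': {'alice': 'cat', 'bob': 'dog'}}
```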

Once the initial annotation is done, the data goes through a quality assurance review. Adjudicators check to make sure the data has been properly labeled according to the guidelines. If any issues are found, the data may go back to the annotators for re-labeling.

Finally, the fully annotated dataset is complete! It can now be used to train machine learning models. The data is usually split into training, validation and testing sets. The training set is used to train models, the validation set is used to evaluate and tune models during development, and the testing set evaluates the final model.
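A reproducible split can be sketched with Python's standard `random` module. The 80/10/10 proportions and the seed below are assumptions for illustration; the text does not prescribe specific fractions.

```python
import random


def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle annotated items reproducibly and split them into
    training, validation, and test sets."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed gives the same split on every run
    rng.shuffle(items)
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # whatever remains becomes the test set
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 80 10 10
```

Fixing the seed means everyone on the project trains and evaluates on the same split, so results stay comparable.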

And the cycle continues as new data comes in. Consistently monitoring and improving the annotation process helps to achieve high quality data that leads to the best machine learning results.

Data Annotation Quality Control

The quality of your data annotation directly impacts the accuracy of your AI models. Once the initial annotation process is complete, it’s critical to implement quality control procedures to ensure high data quality standards are met.

As the project owner, perform random spot checks on the annotated data. Look for consistency in how the guidelines are being followed by the annotators, as well as any obvious errors or incomplete annotations. Provide feedback to annotators to resolve any issues. You may need to re-annotate portions of the data to fix major problems.

Consider having a second annotator independently label a sample of the same data and measuring inter-annotator agreement. Compare how the annotators labeled the same items and look for differences. Discuss any disagreements to determine the correct annotation, then re-annotate as needed. A high level of agreement between annotators indicates consistent, high-quality annotation.
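Inter-annotator agreement is often summarized with Cohen's kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The text does not name a specific statistic, so kappa is shown here as one common choice; it applies to exactly two annotators assigning categorical labels to the same items.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each annotator's label frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1:
        return 1.0  # both used one identical label for every item
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on 75% of items, but chance alone would produce 50% agreement, so kappa is 0.5. Values near 1 indicate strong agreement beyond chance; values near 0 mean agreement is about what chance would produce.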

Data annotation quality control also includes tracking key metrics like annotation speed, accuracy, and coverage. Monitor these metrics for each annotator and for the project as a whole. Unusually high speed can indicate rushed, lower-quality work, while low accuracy signals a need for retraining. Use these metrics to pinpoint any drop in data quality so you can make corrections quickly.
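Per-annotator metrics can be computed from a log of completed annotations. This sketch assumes a hypothetical gold set (items whose correct label has already been verified), the hours each annotator worked, and the number of items assigned to each; accuracy is measured only on the gold items that annotator happened to label.

```python
from collections import defaultdict


def annotator_metrics(annotations, gold, hours_worked, assigned):
    """Per-annotator speed, accuracy, and coverage.

    annotations:  list of (annotator, item_id, label) tuples
    gold:         {item_id: verified_label} for a hand-checked subset
    hours_worked: {annotator: hours spent}
    assigned:     {annotator: number of items assigned}
    """
    done = defaultdict(int)
    gold_seen = defaultdict(int)
    gold_correct = defaultdict(int)
    for annotator, item_id, label in annotations:
        done[annotator] += 1
        if item_id in gold:
            gold_seen[annotator] += 1
            if label == gold[item_id]:
                gold_correct[annotator] += 1

    report = {}
    for annotator, count in done.items():
        hours = hours_worked.get(annotator)
        report[annotator] = {
            "items_per_hour": count / hours if hours else None,
            "gold_accuracy": (gold_correct[annotator] / gold_seen[annotator]
                              if gold_seen[annotator] else None),
            "coverage": (count / assigned[annotator]
                         if assigned.get(annotator) else None),
        }
    return report


report = annotator_metrics(
    annotations=[("alice", "1", "cat"), ("alice", "2", "dog"),
                 ("bob", "1", "dog"), ("bob", "3", "cat")],
    gold={"1": "cat", "2": "dog"},
    hours_worked={"alice": 1, "bob": 2},
    assigned={"alice": 2, "bob": 4},
)
print(report["bob"])  # {'items_per_hour': 1.0, 'gold_accuracy': 0.0, 'coverage': 0.5}
```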

Automated data validation tools can also help with quality control. Some tools analyze annotated data for formatting errors, missing values, or logical inconsistencies. Others compare the distribution of annotations to expected patterns. Any anomalies identified by these tools should be double-checked and corrected by human annotators.
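A basic automated check might look like the sketch below. It assumes records are plain dictionaries with `item_id` and `label` keys and that you have an expected share for each label; the 10% tolerance is arbitrary. It flags missing values, unknown labels, and labels whose share of the batch drifts from what you expected, leaving the final call to a human.

```python
from collections import Counter


def validate_batch(records, allowed_labels, expected_share, tolerance=0.1):
    """Return human-readable issues found in a batch of annotation records."""
    issues = []
    for i, rec in enumerate(records):
        if not rec.get("item_id") or not rec.get("label"):
            issues.append(f"record {i}: missing item_id or label")
        elif rec["label"] not in allowed_labels:
            issues.append(f"record {i}: unknown label {rec['label']!r}")

    # Compare the label distribution of valid records with expectations.
    counts = Counter(rec.get("label") for rec in records
                     if rec.get("label") in allowed_labels)
    total = sum(counts.values())
    if total:
        for label, share in expected_share.items():
            actual = counts[label] / total
            if abs(actual - share) > tolerance:
                issues.append(f"label {label!r}: {actual:.0%} of batch, "
                              f"expected about {share:.0%}")
    return issues


batch = [
    {"item_id": "1", "label": "cat"},
    {"item_id": "2", "label": "cat"},
    {"item_id": "3", "label": "cat"},
    {"item_id": "4", "label": ""},
    {"item_id": "5", "label": "bird"},
]
for issue in validate_batch(batch, {"cat", "dog"}, {"cat": 0.5, "dog": 0.5}):
    print(issue)
```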

Constant data monitoring and improvement is essential for any AI or machine learning project. High-quality, accurately annotated data will translate into higher model accuracy and better performance. Make data quality control an ongoing priority and your AI will benefit from clean, consistent training data.

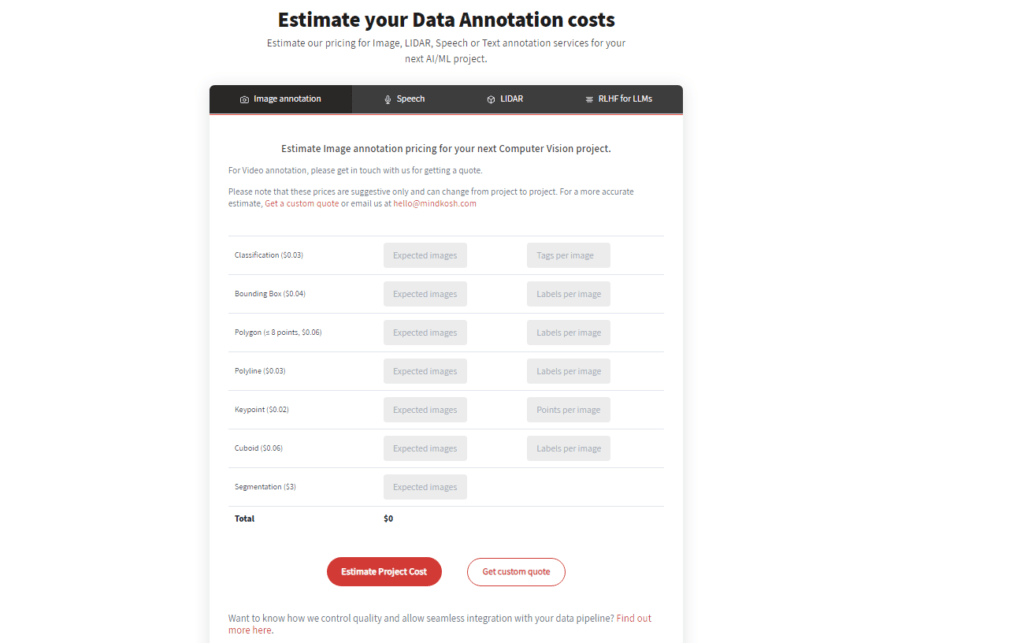

How Much Does Data Annotation Cost?

Data annotation services typically charge on a per-annotation or per-item basis. The exact cost depends on several factors:

The type of data – Text, image, video, audio, etc. Image and video annotation tend to cost more than text annotation due to the additional time required.

The complexity of the data – Simple data with only a few attributes may cost $0.10 to $0.50 per item. More complex data with many attributes or requiring subjective judgments can cost $1 to $10 or more per item.

The number of annotations required per item – Having multiple annotators label each item (sometimes called consensus labeling), and then having adjudicators resolve their disagreements, increases costs.

The experience level of annotators – Expert annotators with specialized domain knowledge charge higher rates than general annotators.

Geographic location – Annotation costs are often lower in regions with lower costs of living and wages. Many companies outsource annotation to cheaper overseas labor markets.

Annotation tool and platform – Some tools and platforms are more efficient, allowing annotators to complete more annotations per hour. Some providers pass part of these savings on to their clients.

For small annotation projects with just a few thousand items, you might pay between $500 and $5,000 total. For larger projects with hundreds of thousands of items, costs could range from $50,000 up to $500,000 or more. The good news is that as annotation volumes increase, per-item costs often decrease significantly due to efficiencies and volume discounts.
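A rough budget is the number of items, times the annotations per item, times the per-annotation price, less any volume discount. The sketch below encodes that arithmetic. The example numbers are hypothetical, picked from within the per-item ranges quoted above, and are not quotes from any provider.

```python
def estimate_cost(num_items, price_per_annotation, annotations_per_item=1,
                  volume_discount=0.0):
    """Rough annotation budget in dollars.

    volume_discount is a fraction between 0 and 1 (e.g. 0.1 for 10% off).
    """
    if not 0 <= volume_discount < 1:
        raise ValueError("volume_discount must be in [0, 1)")
    gross = num_items * annotations_per_item * price_per_annotation
    return gross * (1 - volume_discount)


# Hypothetical project: 5,000 simple items at $0.25 per annotation,
# with 3 annotators labeling every item.
print(estimate_cost(5_000, 0.25, annotations_per_item=3))            # 3750.0
print(round(estimate_cost(5_000, 0.25, 3, volume_discount=0.1), 2))  # 3375.0
```

Note how tripling the annotators per item triples the bill, which is why overlap is often reserved for a sample of the data rather than every item.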

Some annotation service providers charge flat project fees, especially for very large projects. Others charge an hourly rate for annotation and then estimate total hours to complete the work. A few providers offer subscription pricing models for ongoing annotation needs.

In summary, data annotation costs depend on many factors, but with some rough estimates you can budget and plan your annotation projects accordingly. And remember, while annotation does require an upfront investment, the resulting high-quality data pays dividends through improved AI and machine learning models.

Conclusion: Data Annotation Tech

Ultimately, data annotation is a key part of developing accurate AI systems. While it can be tedious work, understanding the fundamentals will help you hit the ground running. With the right tools and techniques, you’ll be annotating data like a pro in no time. The journey may seem daunting at first, but taking it step-by-step will set you up for success. Who knows, you may even grow to enjoy the process of shaping the AI of the future. The important thing is to stay curious, keep learning, and have fun exploring this fascinating field!